Reasoning

Our reasoning engine represents a paradigm shift in automated analytical thinking. Unlike traditional systems that simply retrieve information, Vinciness genuinely reasons through complex problems. It builds sophisticated arguments from first principles while maintaining logical rigor that rivals expert human analysis.

Why it Stands Out:

-

Beyond Standard LLM "Reasoning"

While many AI systems claim reasoning capabilities, they fundamentally operate through next-token prediction generating responses based on statistical patterns rather than genuine logical analysis. This creates several critical limitations that Vinciness systematically addresses.

Standard LLMs are prone to hallucination because they prioritize generating plausible-sounding text over verified accuracy. They'll confidently cite non-existent studies, reference incorrect dates, or make up statistics that sound reasonable but are completely fabricated. Vinciness, by contrast, validates every factual claim against source material and maintains explicit uncertainty when evidence is incomplete.

Traditional LLMs also suffer from what researchers call "choice collapse" they tend to select confident-sounding answers even when multiple valid interpretations exist, because their training optimizes for seeming authoritative rather than being genuinely analytical. When encountering ambiguous terms, they'll pick one meaning and proceed as if it were obviously correct, rather than acknowledging and working through the ambiguity systematically.

-

Self-Directed Problem Solving

Vinciness operates with remarkable autonomy, orchestrating its own investigative strategy through an elegant planning-execution cycle. It independently determines what questions need answering, which approaches yield the most insight, and when it has gathered sufficient evidence to reach a defensible conclusion.

Unlike systems that follow rigid scripts or get trapped by ambiguous requirements, Vinciness actively constructs its own reasoning pathways. When it encounters limiting language like "exclude," "cannot," or "without," it doesn't collapse to a single interpretation based on what sounds most natural. Instead, it generates multiple interpretation sets covering operational scope, modality, and target granularity before committing to any specific reading. This prevents the common trap where AI systems implement the opposite of intended logic by misunderstanding Boolean operations.

This means less hand-holding and more intelligent progress toward actual solutions.

-

Intelligent Quality Assurance

Every conclusion undergoes multiple layers of validation to guarantee only the highest quality reasoning. Logical Rigor Analysis ensures arguments follow proper premise → inference → conclusion structures. Consistency Verification detects and prevents contradictory reasoning paths. Novelty Assessment identifies genuinely new insights versus redundant exploration. Multi-Source Reconciliation synthesizes diverse perspectives into unified understanding.

But Vinciness goes further by implementing systematic safeguards against the hallucination patterns that plague standard LLMs. It validates all data references against actual schemas before making claims, preventing the frequent trap of confidently referencing non-existent columns or constants. When working with numerical data, it displays intermediate calculations and requires source citations for every constant, catching unit errors and wrong-step rounding that occur when systems generate "reasonable-sounding" numbers rather than calculating from verified sources.

-

Accumulative Knowledge Architecture

Vinciness doesn't just solve problems it remembers everything it learns. Each reasoning session contributes to an ever-growing knowledge base, creating a compounding effect where today's insights become tomorrow's foundation. This knowledge architecture accelerates future breakthroughs with every use.

Critically, the system treats every name as an opaque label, never inferring properties from naming alone. Standard LLMs frequently fall into the nominal fallacy assuming that something called "customer_status" contains status information or that "Tiny Mick" must be small because their training makes these associations statistically likely. Vinciness looks up and records declared attributes rather than making assumptions based on linguistic patterns.

-

Safeguarded Against Circular Reasoning

Advanced duplicate detection and novelty gating prevent Vinciness from wasting cycles revisiting settled questions. It knows when to conclude analysis gracefully rather than generating redundant insights.

This addresses a fundamental weakness in standard LLM reasoning: their tendency to generate content that sounds substantive but adds nothing new. Because they're optimized for producing text rather than advancing understanding, they'll often restate the same points with slight variations, giving an illusion of progress without genuine analytical advancement. Vinciness explicitly tracks when it's contributing new insights versus recycling existing knowledge.

-

Sophisticated Decision Making

When faced with multiple-choice questions or complex ethical dilemmas, Vinciness demonstrates remarkable nuance. It detects logically equivalent options through formal proof systems, weighs competing logical frameworks, articulates clear reasoning chains for its choices, and recognizes when multiple valid interpretations exist.

For terms with multiple legitimate meanings, Vinciness enumerates all plausible senses within the domain, tests each against available constraints, and defers commitment until disambiguation becomes possible. Standard LLMs tend toward polysemous term fixation locking onto whichever interpretation appears most frequently in their training data rather than considering what makes sense in context. This often leads them to ban valid analytical paths because they've prematurely committed to one meaning.

-

Adaptive Strategy Optimization

Vinciness constantly monitors its own performance. If a particular line of reasoning fails, it pivots seamlessly to alternative strategies. This self-correcting mechanism ensures consistent forward progress, even in challenging problem spaces.

The system distinguishes between FALSE and UNKNOWN states, preventing valid analytical paths from vanishing when evidence is absent rather than contradictory. Standard LLMs often treat missing information as negative evidence because silence gets interpreted as "the most likely next token is a negative response" rather than genuine uncertainty.

-

Unparalleled Depth of Analysis

By processing large contexts, Vinciness maintains awareness of entire research corpuses while reasoning through new problems. This enables connections and insights that would be impossible for systems with limited scope.

Throughout extended analyses, Vinciness periodically restates key facts and maintains immutable detail tracking to prevent knowledge drift the common problem where AI systems forget earlier established facts or adopt contradictory information over long conversations. Standard LLMs are particularly vulnerable to this because their context management prioritizes recent tokens over maintaining analytical coherence across complex reasoning chains.

The result is reasoning that systematically avoids the statistical prediction patterns that trap other AI systems while building toward genuine analytical insights that advance understanding rather than simply generating plausible-sounding text.

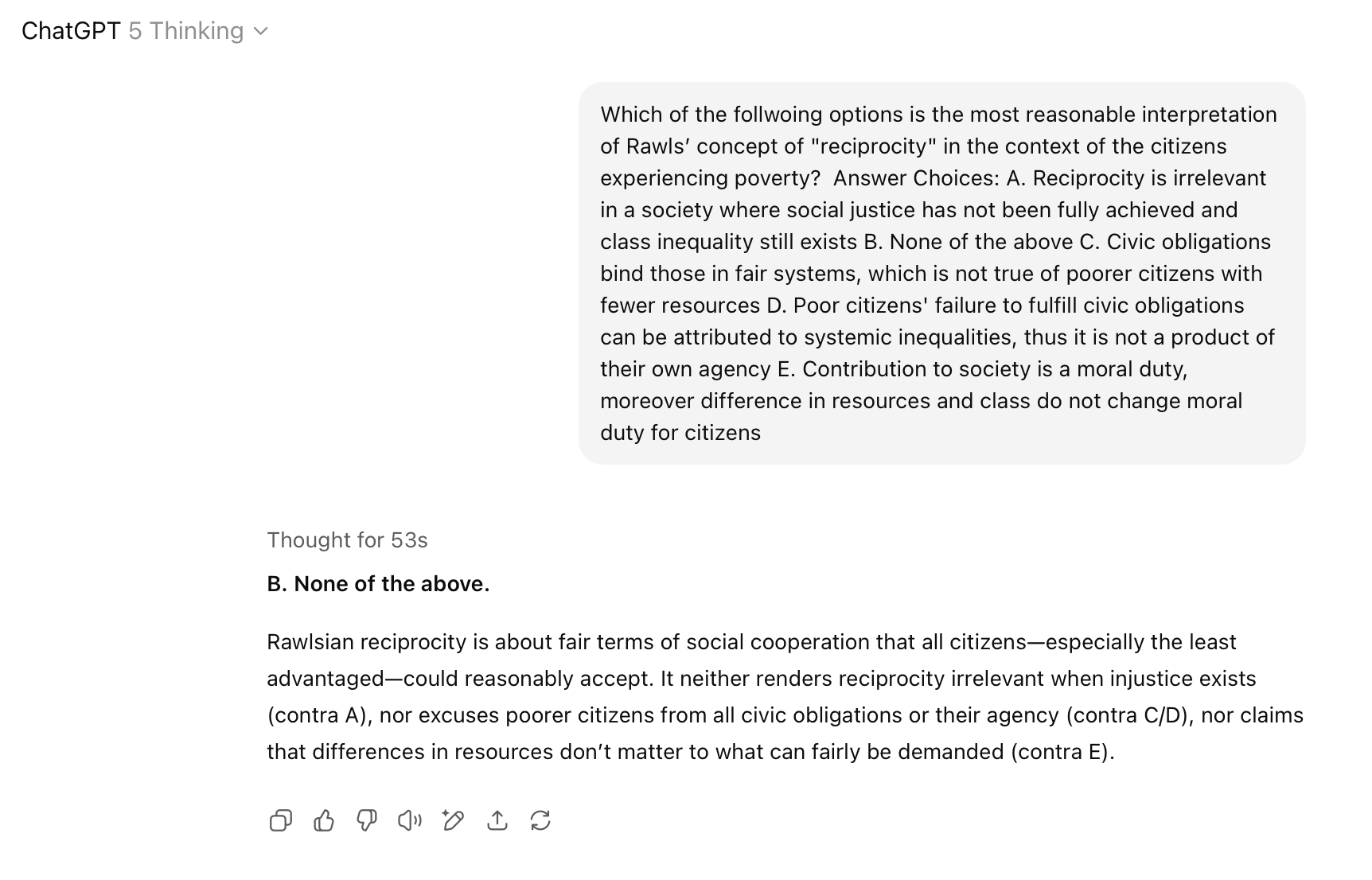

Humanity’s Last Exam

We asked the same question to ChatGPT and Vinciness to compare results and here is what we found out:

What is Humanity’s Last Exam?

Humanity’s Last Exam is a next-generation benchmark made up of 2,500 ultra-difficult, closed-ended questions across more than 100 subjects. Designed as the ultimate test for advanced AI systems, it goes far beyond traditional benchmarks like MMLU, which top models have already saturated. Each question is written and peer-reviewed by experts to ensure novelty, rigor, and academic depth, making it a true measure of reasoning rather than memorization. Because even the strongest AI models struggle often scoring below 30% the benchmark exposes real gaps in logical reasoning and highlights the risk of overconfident but wrong answers. This makes it one of the most reliable ways to evaluate whether an AI can genuinely think through complex problems, an area where Vinciness demonstrates clear strength.

Why Vinciness Is Right.

Philosopher John Rawls introduced the principle of reciprocity the idea that society should be organized so even the least advantaged can reasonably accept its rules. Yet when applied to poverty, this principle reveals just how hard real-world reasoning truly is. Problems like these are difficult because they blur the line between ideal and non-ideal theory between how justice looks in theory and how it must operate in practice. They expose tensions between institutions and individuals, forcing us to ask where obligations truly fall. They test the threshold of reasonableness under severe constraints, requiring us to distinguish between agency and excuse between what people can’t do and what they needn’t do. They involve competing duties justice, fair play, and mutual aid that collide when burdens fall too heavily on the least advantaged. They demand empirical facts, not just abstract theory, and they suffer from textual ambiguity, since Rawls’s remarks are scattered and open to interpretation. Finally, they are shaped by plural moral perspectives egalitarian, republican, relational, and beyond all pulling the concept in different directions.

This is precisely where Vinciness thrives. While systems like ChatGPT can provide useful summaries of why the problem is complex for example, listing the difficulties in a neat but surface-level format Vinciness goes further. It reasons through the tensions, identifies hidden assumptions, reconciles conflicting frameworks, and constructs arguments with logical rigor that remain sensitive to real-world constraints. In practice, this means it doesn’t just restate the challenge; it actually advances the debate, pointing to defensible and practical solutions where others simply stop at description.

What makes this possible are Vinciness’s key differentiators. It operates through autonomous reasoning that requires no hand-holding, building its own investigative strategies. Its self-improving architecture gets smarter with use, while rigorous validation layers ensure only high-quality conclusions survive. It builds persistent knowledge, creating lasting intellectual capital rather than one-off answers. Its adaptive strategies guarantee steady progress even in difficult problem spaces. And unlike other systems, Vinciness reasons with a depth that engages not just technical questions but also nuanced ethical and philosophical dilemmas.

In other words, challenges like Rawls’s reciprocity under poverty the kind of reasoning many call “humanity’s last exam” are not just explained by Vinciness, but solved with clarity, precision, and depth that surpass existing tools.

ChatGPT

Lists the challenges but stops at description

Highlights tensions (ideal vs. non-ideal, institutions vs. individuals)

Notes ambiguity and competing duties

Provides structured summaries, but no resolution

Vinciness

Goes beyond description to true reasoning

Reconciles conflicting moral frameworks

Weighs obligations between institutions and individuals

Builds clear, defensible solutions with logical rigor

Shows not just why the problem is hard, but how to solve it